Introduction

This article demonstrates how to use an Azure Data Factory (ADF) template to copy data from AWS S3 to Azure Storage. The template sets up a connection between an Amazon S3 bucket and an Azure storage account, then pulls the files and folders from the bucket into the storage account. The resulting pipeline can be customized to work with other sources and targets.

The steps below show how to modify the Azure Data Factory template to copy data from AWS S3 to Azure Blob storage.

Pre-Requisites

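- Sign in to AWS as the root user, as this walkthrough does. An IAM user that can create access keys and read the bucket should also work.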

- Create a storage account in the Azure portal.

- Create an Azure Data Factory resource in the Azure portal.

- Have an Amazon S3 bucket that contains the data to copy.

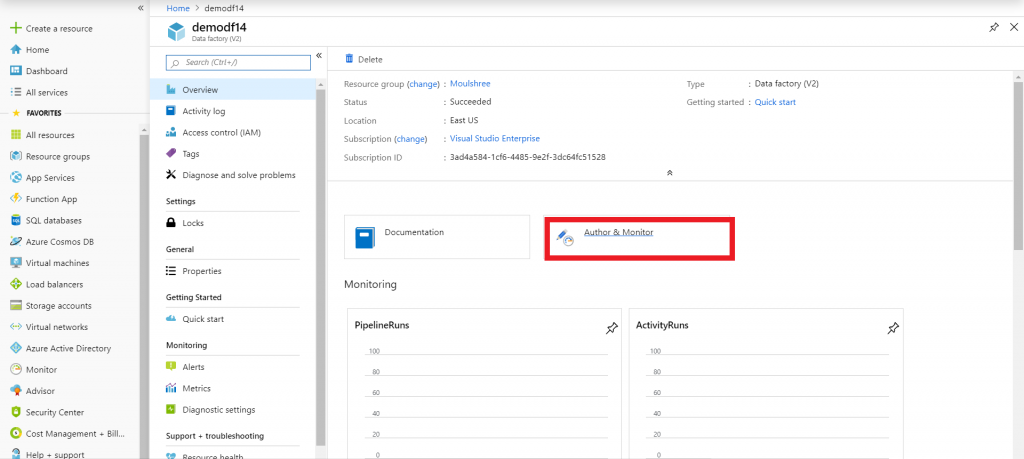

Step 1 : Open your Azure Data Factory resource in the Azure portal.

Click “Author & Monitor”, as shown in the image below:

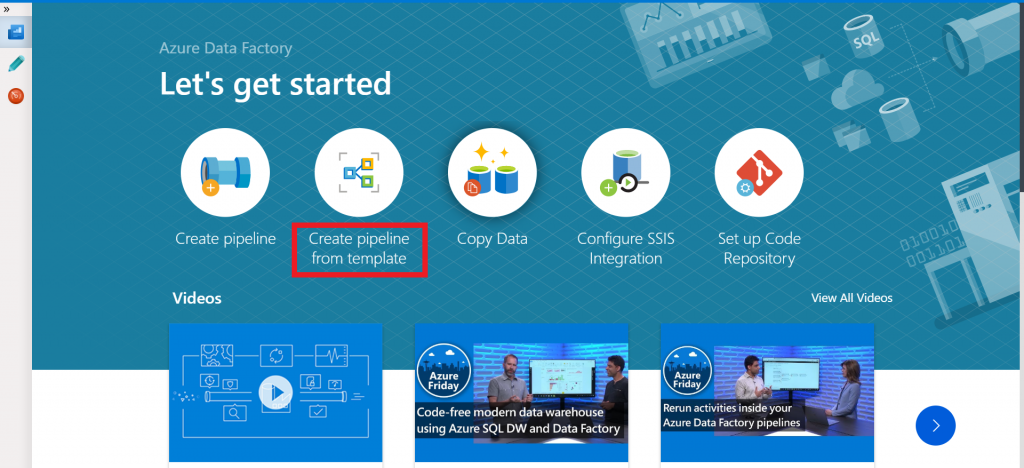

Step 2 : Select “Create pipeline from template”.

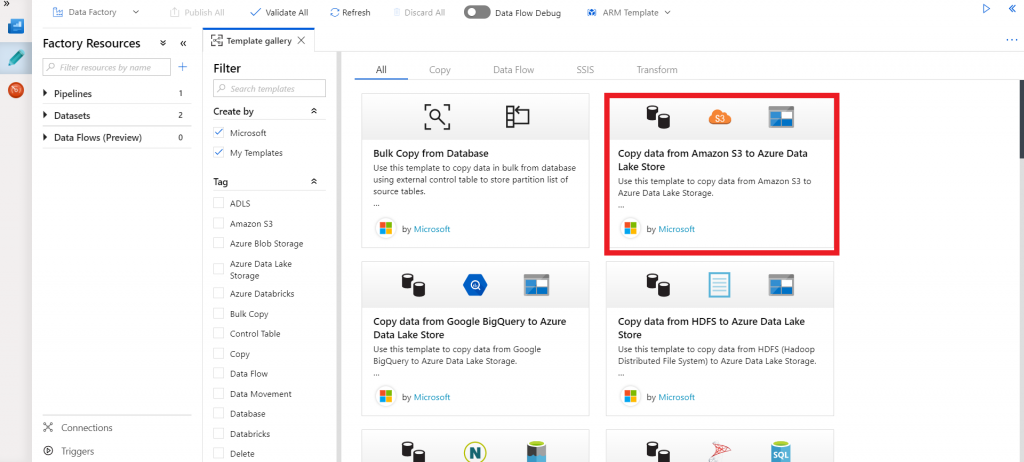

Step 3 : Select “Copy data from Amazon S3 to Azure Data Lake Store”.

Then click “Use this Template”.

Note : The template name refers to Azure Data Lake Store, but in this article the data is written to Azure Blob Storage.

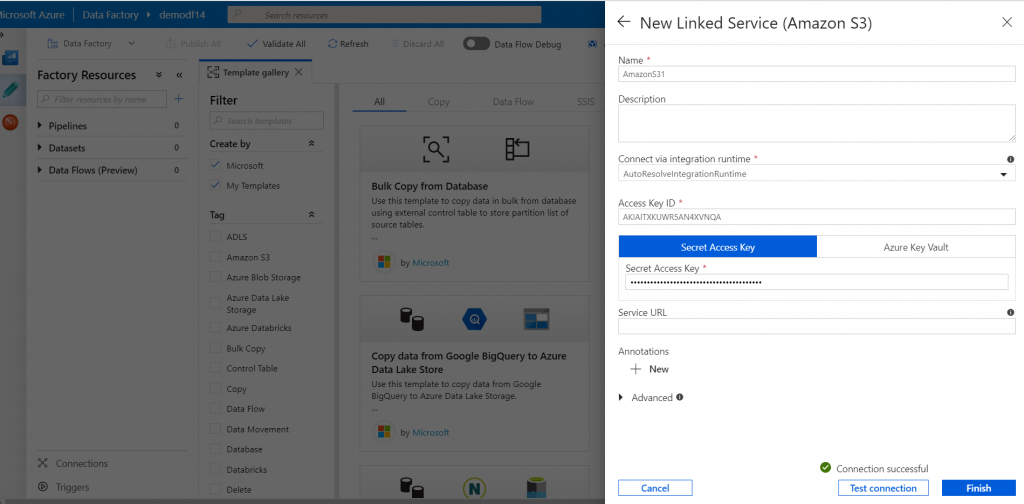

Step 4 : Enter a name and description for the linked service as required.

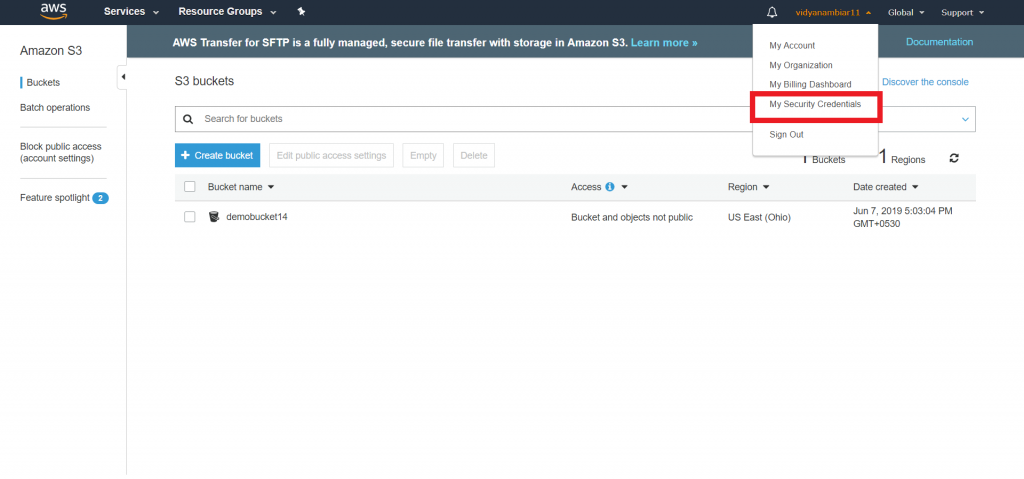

Step 5 : Go to “My Security Credentials” in your AWS portal.

Step 6 : Under Access Keys, click “Create New Access Key”.

You will be prompted to download a file that contains the Access Key ID and Secret Access Key. Click “Download Key File”.

Enter the Access Key ID and Secret Access Key in the Azure Data Factory linked service panel, then click “Finish”.
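Behind the panel, ADF stores the linked service as a JSON definition. The following is a minimal sketch of what such a definition looks like for an Amazon S3 linked service, built as a Python dictionary. The function name, the linked service name, and the placeholder credentials are assumptions for illustration; the definition your template generates may contain additional properties.

```python
import json


def build_s3_linked_service(name, access_key_id, secret_access_key):
    """Return a dict shaped like an ADF Amazon S3 linked service definition."""
    return {
        "name": name,
        "properties": {
            "type": "AmazonS3",
            "typeProperties": {
                "accessKeyId": access_key_id,
                # SecureString marks the value as a secret in ADF.
                "secretAccessKey": {
                    "type": "SecureString",
                    "value": secret_access_key,
                },
            },
        },
    }


# Placeholder values only; never hard-code real credentials.
linked_service = build_s3_linked_service(
    name="AmazonS3LinkedService",  # hypothetical name
    access_key_id="<access-key-id>",
    secret_access_key="<secret-access-key>",
)
print(json.dumps(linked_service, indent=2))
```

Comparing this shape with the JSON shown by the code view of your linked service in ADF is a quick way to confirm that the key pair landed in the right fields.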

Step 7 : Your pipeline now appears in the ADF portal, and you are prompted to specify the name of the bucket. Click the top right corner to hide this panel, then click the pipeline. All the source and sink options are now accessible.

Step 8 : Click “edit” in the source panel and enter the S3 bucket path/folder.

Step 9 : Click “edit” in the sink panel and enter the destination folder path in the Azure storage account.
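The source and sink paths entered in Steps 8 and 9 each consist of a root (the S3 bucket or the blob container) followed by a folder path. The sketch below splits such a path and builds location dictionaries in the shape used by newer ADF dataset definitions (`AmazonS3Location` and `AzureBlobStorageLocation`). This is an illustration under assumptions: the helper names and example paths are hypothetical, and the datasets created by the template may use a different dataset format.

```python
def split_storage_path(path):
    """Split 'root/folder/sub' into ('root', 'folder/sub')."""
    parts = path.strip("/").split("/", 1)
    root = parts[0]
    folder = parts[1] if len(parts) > 1 else ""
    if not root:
        raise ValueError("path must start with a bucket or container name")
    return root, folder


def s3_location(path):
    """Describe the source: an S3 bucket plus an optional folder."""
    bucket, folder = split_storage_path(path)
    return {"type": "AmazonS3Location", "bucketName": bucket, "folderPath": folder}


def blob_location(path):
    """Describe the sink: a blob container plus an optional folder."""
    container, folder = split_storage_path(path)
    return {
        "type": "AzureBlobStorageLocation",
        "container": container,
        "folderPath": folder,
    }


# Hypothetical example paths.
print(s3_location("my-bucket/exports/2020"))
print(blob_location("landing/s3-copy"))
```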

Step 10 : Go to the Pipeline panel and validate the pipeline by clicking “Validate” on the top bar.

Step 11 : Click “Debug” to run your pipeline. You can also click the Monitor button in the leftmost panel to view the pipeline status.

Check your Azure Blob storage: all the files should now have been copied.
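For larger buckets, checking by eye is impractical. One possible way to confirm the copy is to list both sides and compare relative paths, sketched below. It assumes the `boto3` and `azure-storage-blob` packages are installed, that credentials are configured for both, and that the copy preserved the folder hierarchy. The function names and example prefixes are hypothetical.

```python
def list_s3_keys(bucket, prefix=""):
    """List object keys under a prefix. Requires the boto3 package."""
    import boto3  # imported lazily so the comparison helper works without it

    paginator = boto3.client("s3").get_paginator("list_objects_v2")
    keys = set()
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        for obj in page.get("Contents", []):
            keys.add(obj["Key"])
    return keys


def list_blob_names(connection_string, container, prefix=""):
    """List blob names under a prefix. Requires azure-storage-blob."""
    from azure.storage.blob import ContainerClient

    client = ContainerClient.from_connection_string(connection_string, container)
    return {blob.name for blob in client.list_blobs(name_starts_with=prefix)}


def missing_in_sink(source_keys, sink_names, source_prefix="", sink_prefix=""):
    """Return source objects that have no blob at the same relative path."""

    def relative(names, prefix):
        return {n[len(prefix):].lstrip("/") for n in names if n.startswith(prefix)}

    rel_source = relative(source_keys, source_prefix)
    rel_sink = relative(sink_names, sink_prefix)
    # Skip empty names and S3 "folder" placeholder keys that end with "/".
    rel_source = {n for n in rel_source if n and not n.endswith("/")}
    return sorted(rel_source - rel_sink)


# Example with in-memory listings (no cloud access needed).
print(missing_in_sink(
    {"exports/a.csv", "exports/sub/b.csv"},
    {"landing/a.csv"},
    source_prefix="exports",
    sink_prefix="landing",
))
```

With real data, pass the results of `list_s3_keys` and `list_blob_names` to `missing_in_sink`; an empty list means every source object has a counterpart in the container.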

To update the blob storage with new data, just re-run the pipeline.
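Re-running can also be scripted rather than done from the portal. The sketch below starts a pipeline run and polls until it finishes. It assumes a client from the `azure-mgmt-datafactory` package, whose `pipelines.create_run` and `pipeline_runs.get` operations it calls, and it assumes the pipeline has been published, since triggered runs use the published version. The function name, polling interval, and timeout are illustrative choices.

```python
import time

# Run states after which the status no longer changes.
TERMINAL_STATES = {"Succeeded", "Failed", "Cancelled"}


def run_pipeline_and_wait(client, resource_group, factory_name, pipeline_name,
                          poll_seconds=15, timeout_seconds=3600):
    """Start a pipeline run and return its final status."""
    run = client.pipelines.create_run(resource_group, factory_name, pipeline_name)
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = client.pipeline_runs.get(
            resource_group, factory_name, run.run_id
        ).status
        if status in TERMINAL_STATES:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"run {run.run_id} still {status} after timeout")
        time.sleep(poll_seconds)
```

A client could be created with `DataFactoryManagementClient(DefaultAzureCredential(), subscription_id)`, using `azure.mgmt.datafactory` and `azure.identity`; the subscription ID, resource group, factory, and pipeline names are whatever you chose when setting up the resources.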

Conclusion

Azure Data Factory templates are a great way to start building data transfer and transformation workflows with custom logic. Let’s explore ADF pipelines from templates!

Interested in Microsoft Azure? Let’s connect!