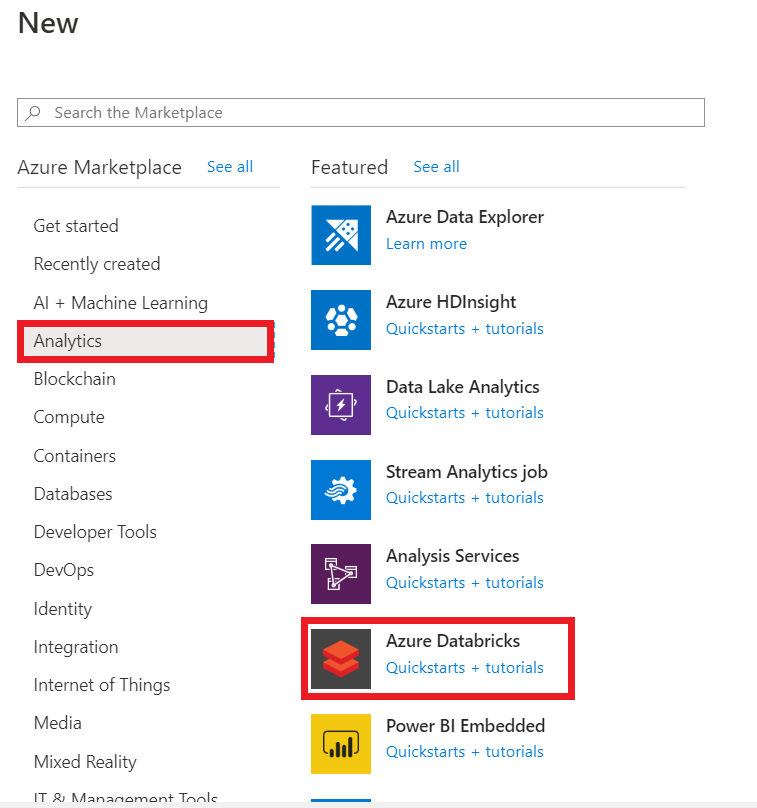

Azure Databricks is a fast, collaborative, Apache Spark-based analytics platform on Azure. Its interactive workspaces and streamlined workflows enable data scientists, data engineers, and business analysts to extract, transform, and load big data with ease. It integrates with a variety of data sources and services, such as Azure SQL Data Warehouse, Azure Cosmos DB, Azure Data Lake, Azure Blob Storage, Event Hubs, and Power BI. Azure Databricks provides a secure and scalable environment with Azure Active Directory integration, role-based access, machine learning capabilities, and reduced cost, combined with a fully managed cloud platform.

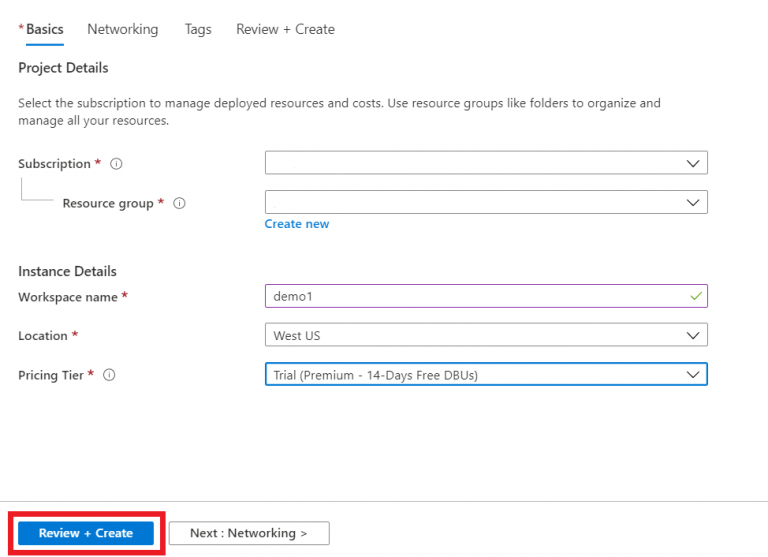

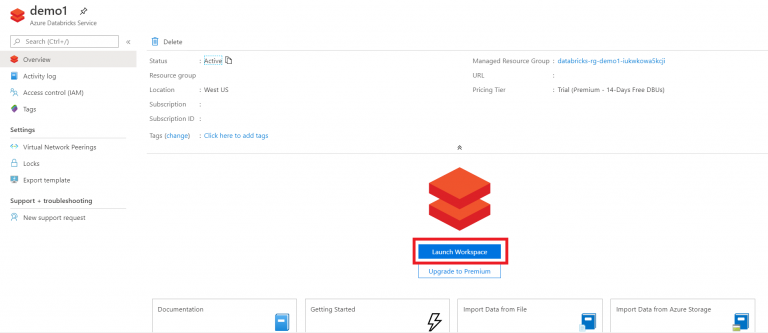

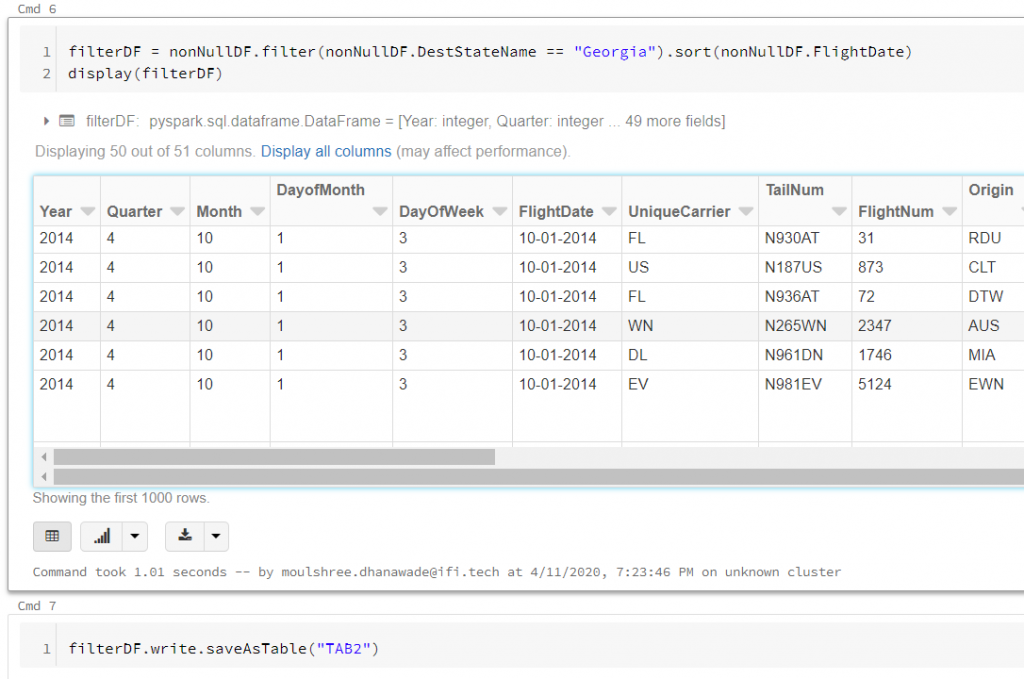

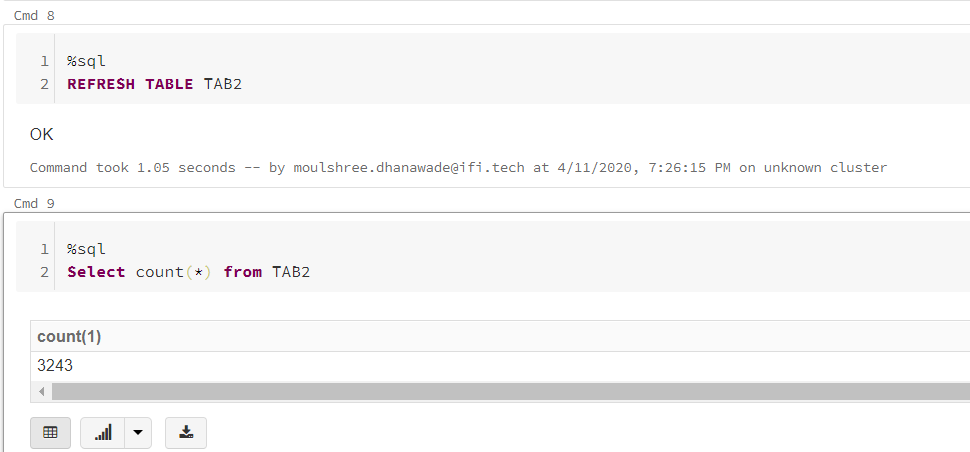

This document will help you create an end-to-end Databricks solution to load, prepare, and store data.